You are currently browsing the category archive for the ‘Problem of the Week (POW)’ category.

Huh — no solutions to POW-13 came in! I guess I was surprised by that.

Ah well, that’s okay. The problem wasn’t exactly trivial; there are some fairly deep and interesting things going on in that problem that I’d like to share now. First off, let me say that the problem comes from The Unapologetic Mathematician, the blog written by John Armstrong, who posted the problem back in March this year. He in turn had gotten the problem from Noam Elkies, who kindly responded to some email I wrote and had some pretty insightful things to say.

In lieu of a reader solution, I’ll give John’s solution first, and then mine, and then touch on some of the things Noam related in his emails. But before we do so, let me paraphrase what John wrote at the end of his post:

Here’s a non-example. Pick

points

and so on up to

. Pick

points

,

,

, and so on up to

. In this case we have

blocking points at

,

, and so on by half-integers up to

. Of course this solution doesn’t count because the first

points lie on a line as do the

points that follow, which violates the collinearity condition of the problem.

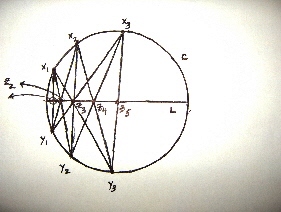

Here’s a picture of that scenario when :

Does this configuration remind you of anything? Did somebody say “Pappus’s theorem“? Good. Hold that thought please.

Okay, in the non-example the first points were on two lines, which is disallowed. Now the two lines here,

and

, form a degenerate conic

. Thinking in good algebraic geometry fashion, perhaps we can modify the non-solution by replacing the degenerate conic by an honest (smooth) nondegenerate conic, like an ellipse or something, so that at most two of the

points are on any given line. This should put one in the mood for our first solution.

John Armstrong writes: Basically, I took the non-example and imagined bending back the two lines to satisfy the collinearity (pair-of-lines = degenerate conic, so non-example is degenerate example). The obvious pair of curves to use is the two branches of a hyperbola. But hyperbolas can be hard to work with, so I decided to do a projective transformation to turn it into a parabola.

The line itself is . Which has the obvious

-intercept

. Now we need to pick a lot of

and

values to get repeated products. Place

points at

,

,

,

, and so on above the negative powers of two. Place

points at

,

,

,

, and so on above the positive powers of two. The blocking points are then at

,

,

,

, and so on up the

-axis by powers of two. Presto!

Very nice. My own solution was less explicit in the sense that I didn’t actually write down coordinates of points, but gave instead a general recipe which relies instead on the geometry of conics, in fact on a generalization of Pappus’s theorem known to me as “Pascal’s mystic hexagon“. I first learned about this from a wonderful book:

- C. Herbert Clemens, A Scrapbook of Complex Curve Theory (2nd Edition), Graduate Studies in Mathematics 55, AMS (2002).

Pascal’s Mystic Hexagon, version A: Consider any hexagon inscribed in a conic, with vertices marked . For

, mark the intersection of the lines

by

. Then

are collinear. (The reason for the strange indexing will be clear in a moment.)

Pascal’s Mystic Hexagon, version B: Consider any pentagon inscribed in a conic , with vertices marked

. Choose any line

through

, and define

and

. Then the intersection

is the sixth point of a hexagon inscribed in

.

The following solution uses version B.

Solution by Todd and Vishal: For the sake of explicitness, let the conic be a circle

and let the line (segment)

be the diameter along

. Choose two points

on

above

and a point

on

below

. The remaining points are determined recursively by a zig-zag procedure, where at each stage

is the intersection

, where

, where

, and

. We will show the blocking condition is satisfied: for all

, the point

is on the line

.

To get started, notice that the blocking condition is trivially satisfied up to the point where we construct . Mystic Hexagon B ensures that

, as defined above, is blocked from

by

and from

by

. Then define

as above. So far the blocking condition is satisfied.

Suppose the blocking condition is satisfied for the points . Define

, as above. Then Mystic Hexagon B, applied to the pentagon consisting of points

, shows that

is blocked from

by

and from

by

.

This shows that could have been defined to be

. Then Mystic Hexagon B, applied to the pentagon

, shows that

is blocked from

by

and from

by

. This shows

could have been defined to be

.

And so on up the inductive ladder: for , defining

, Hexagon B applied to the pentagon

shows that

could have been defined to be

. This shows the blocking condition is satisfied for

,

. We block

from

by defining

as above, to extend up to

.

Then, as prescribed above, we define , and repeat the preceding argument mutatis mutandis (interchanging

‘s and

‘s), to obtain the blocking conditions up to

,

. This completes the proof.

What this argument shows (with Hexagon B doing all the heavy lifting) is that no cleverness whatsoever is required to construct the desired points: starting with any nondegenerate conic , any secant line

, and any three initial points

on

to get started (with

‘s on one side of

and

on the other), the whole construction is completely forced and works no matter what!

Which may lead one to ask: what is behind this “miracle” called Pascal’s Mystic Hexagon? Actually, not that much! Let me give a seat-of-the-pants argument for why one might expect it to hold, and then give a somewhat more respectable argument.

Define a planar cubic to be the locus of any degree 3 polynomial

in two variables. (We should actually be doing this as projective geometry, so I ought to write

where

is homogeneous of degree 3, but I think I’ll skip that.) For example, the union of three distinct lines is a cubic where

is a product of three degree 1 polynomials. What is the dimension of the space of planar cubics? A cubic polynomial

,

has 10 coefficients. But the equation is equivalent to the equation

for any nonzero scalar

; modding out by scalars, there are 9 degrees of freedom in the space of cubics. What is the dimension of the space of cubics passing through a given point

? The condition

gives one linear equation on the coefficients

, so we cut down a degree of freedom, and the dimension would be 8. Similarly, if we ask for the dimension of cubics passing through 8 given points, we get a system of eight linear equations, and we cut down by eight degrees of freedom: in general, the space of cubics through 8 points is “expected” to be (is “almost always”) 1-dimensional, in fact, a (projective) line.

In the configuration for Pascal’s Mystic Hexagon, version A

we see three cubics passing through the 8 points , namely:

where the conic

is defined by a degree 2 polynomial

Since we expect that the space of cubics through these eight points is a line, we should have a linear relationship between the cubic polynomials used respectively to define

, and

above, hence we would get

for some scalars . Thus, if a ninth point

is in

and

, so that

, then

. Thus

lies in

as well, and if

isn’t on

, it must be on the line

. Thus, “generically” we expect

to be collinear, whence Hexagon A.

This rough argument isn’t too far removed from a slightly more rigorous one. There’s a general result in projective algebraic geometry called Bézout’s theorem, which says that a degree planar curve and a degree

planar curve either intersect in

points (if you count them right, “with multiplicity”) or they have a whole curve component in common. (Fine print: to make this generally true, you have to work in the projective plane, and you have to work over an algebraically closed field.) A much weaker result which removes all the fine print is that a degree

curve and a degree

curve either have a curve component in common, or they intersect in at most

points. In particular, in the notation above, the cubics

and

intersect in 9 points, 6 of which are on the conic

. Pick a seventh point

on

, away from those six, and let

and

. Then we see that the locus of the degree 3 polynomial

intersects the degree 2 conic in at least 7 points (namely,

and

), greater than the expected number

, which is impossible unless the loci of

and

have a component in common. But the conic

has just one component — itself — so one can conclude that its defining degree 2 polynomial (I’ll call it

) must divide

. Then we have

for some degree 1 polynomial , so the last three of the nine points of intersection

, which are zeroes of

and

, must be zeroes of the linear polynomial

, and hence are collinear. Thus we obtain Pascal’s Mystic Hexagon, version A.

It’s clear then that what makes the Mystic Hexagon tick has something to do with the geometry of cubic curves. With that in mind, I’m now going to kick the discussion up a notch, and relate a third rather more sophisticated construction on cubics which basically subsumes the first two constructions. It has to do with so-called “elliptic curves“.

Officially, an elliptic curve is a smooth projective (irreducible) cubic “curve” over the complex numbers. I put “curve” in quotes because while it is defined by an equation where

is a polynomial of degree 3, the coefficients of

are complex numbers as are the solutions to this equation. We say “curve” in the sense that locally it is like a “line”, but this is the complex line

we’re talking about, so from our real number perspective it is two-dimensional — it actually looks more like a surface. Indeed, an elliptic curve is an example of a Riemann surface. It would take me way too far afield to give explanations, but when you study these things, you find that elliptic curves are Riemann surfaces of genus 1. In more down-to-earth terms, this means they are tori (toruses), or doughnut-shaped as surfaces. Topologically, such tori or doughnuts are cartesian products of two circles.

Now a circle or 1-dimensional sphere carries a continuous (abelian) group structure, if we think of it as the set of complex numbers

of norm 1, where the group operation is complex multiplication. A torus

also carries a group structure, obtained by multiplying in each of the two components. Thus, given what we have said, an elliptic curve also carries a continuous group structure. But it’s actually much better than that: one can define a group structure on a smooth complex cubic

(in the complex plane

, or rather the projective complex plane

) not just by continuous operations, but by polynomially defined operations, and the definition of the group law is just incredibly elegant as a piece of geometry. Writing the group multiplication as addition, it says that if

are points on

, then

if are collinear. [To be precise, one must select a point 0 on

to serve as identity, and this point must be one of the nine inflection points of

. When

and

coincide (are “infinitesimally close”), the line through

and

is taken to be tangent to

; when

coincide, this is a line of inflection.]

This is rather an interesting thing to prove, that this prescription actually satisfies the axioms for an abelian group. The hardest part is proving associativity, but this turns out to be not unlike what we did for Pascal’s Mystic Hexagon: basically it’s an application of Bézout’s theorem again. (In algebraic geometry texts, such as Hartshorne’s famous book, the discussion of this point can be far more sophisticated, largely because one can and does define elliptic curves as certain abstract 1-dimensional varieties or schemes which have no presupposed extrinsic embeddings as cubic curves in the plane, and there the goal is to understand the operations intrinsically.)

In the special case where is defined by a cubic polynomial with real coefficients, we can look at the locus of real solutions (or “real points”), and it turns out that this prescription for the group law still works on the real locus, in particular is still well-defined. (Basically for the same reason that if you have two real numbers

which are solutions to a real cubic equation

, then there is also a third real solution

.) There is still an identity element, which will be an inflection point of the cubic.

Okay, here is a third solution to the problem, lifted from one of Noam Elkies’ emails. (The original formulation of the problem spoke in terms of “red” points (instead of my

),

“blue” points (instead of my

), and “blocking” points which play the role of my

.) The addition referred to is the addition law on an elliptic curve. I’ve taken the liberty of paraphrasing a bit.

“Choose points on the real points of an elliptic curve such that

is in-between

and

. Then set

- red points:

,

- blue points:

,

- blocking points:

,

where is a real point on the elliptic curve very close to the identity. The pair

,

is blocked by

, because these three points are collinear, and the smallness of P guarantees that the blocking point is actually between the red point and blue point, by continuity.”

Well, well. That’s awfully elegant. (According to Noam’s email, it came out of a three-way conversation between Roger Alperin, Joe Buhler, and Adam Chalcraft. Edit: Joe Buhler informs me in email that Joel Rosenberg’s name should be added. More at the end of this post.) Noam had given his own slick solution where again the red and blue points sit on a conic and the blocking points lie on a line not tangent to the conic, and he observed that his configuration was a degenerate cubic, leading him to surmise that his example could in a sense be seen as a special case of theirs.

How’s that? The last solution took place on a smooth (nondegenerate) cubic, so the degenerate cubic = conic+line examples could not, literally speaking, be special cases. Can the degenerate examples be seen in terms of algebraic group structures based on collinearity?

The answer is: yes! As you slide around in the space of planar cubics, nondegenerate cubics (the generic or typical case) can converge to cubics which are degenerate in varying degrees (including the case of three lines, or even a triple line), but the group laws on nondegenerate cubics based on collinearity converge to group laws, even in degenerate cases! (I hadn’t realized that.) You just have to be careful and throw away the singular points of the degenerate cubic, but otherwise you can basically still use the definition of the group law based on collineation, although it gets a little tricky saying exactly how you’re supposed to add points on a line component, such as the line of conic+line.

So let me give an example of how it works. It seems convenient for this purpose to use John Armstrong’s model which is based on the parabola+line, specifically the locus of . The singular points of its projective completion are at

and the point where the

-axis meets the line at infinity. After throwing those away, what remains is a disjoint union of four pieces: right half of parabola, left half of parabola, positive

-axis, negative

-axis.

We can maybe guess that since implies

collinear, that the two pieces of the

-axis form a subgroup for the group law we are after (also, these two pieces together should suggest the two halves of the multiplicative group of nonzero reals

, but don’t jump to conclusions how this works!). If so, then we notice that if

and

lie on the parabola, then the line between them intersects the

-axis at a point

, so then the parabolic part would not be closed under multiplication.

One is then led to consider that the group structure of this cubic overall is isomorphic to the group , with the linear part identified somehow with the subgroup

, and the parabolic part with

.

I claim that the abelian group structure on the punctured -axis should be defined by

so that the identity element on the cubic is , and the inverse of

is

. The remainder of the abelian group structure on the cubic is defined as follows:

Undoubtedly this group law looks a bit strange! So let’s do a spot check. Suppose ,

, and

are collinear. Then it is easily checked that

, and each of the two equations

is correct according to the group law, so the three collinear points do add to the identity and everything checks out.

All right, let’s retrieve John’s example as a special case. Take as a red point,

as a blue point, and

as blocking point. Take a point

“sufficiently close” to the identity, say

. Then

which was John’s solution.

Another long post from yours truly. I was sorry no solutions came from our readers, but if you’d like another problem to chew on, here’s a really neat one I saw just the other day. Feel free to discuss in comments!

Exercise: Given a point on a circle, show how to draw a tangent to the point using ruler only, no compass. Hint: use a mystic hexagon where two of the points are “infinitesimally close”.

Added July 25: As edited in above, Joel Rosenberg also had a hand in the elliptic curves solution, playing an instrumental role in establishing some of the conjectures, such as that is the minimal number of blocking points under certain assumptions, and (what is very nice) that the elliptic curves solution is the most general solution under certain assumptions. I thank Joe Buhler for transmitting this information.

It’s been an awfully long time since I’ve posted anything; time to finally break the silence.

This problem appeared elsewhere on the internet some months ago; some of you may have already seen it. I don’t want to say right away where I saw it, because there was some commentary which included some rough hints which I don’t want to give, but I’ll be sure to give credit when the solution is published. I’ll bet some of you will be able to find a solution, and will agree it’s quite cute. Here it is:

Given integers

, show that it is possible to construct a set of

points in the plane, let’s say

, so that no three points of the set are collinear, and for which there exist points

, all lying on a straight line, and arranged so that on the line between any

and any

, some

lies between them.

So no can “see” any

, because there’s always some

blocking the view. As the proposer observed, the problem would be easy if we had

‘s to play with, one for each pair

. But here there are only

‘s, so some of them will have to do more than their fair share, blocking the view between quite a few

-pairs simultaneously. Thus, you have to arrange the

‘s and

‘s so that a lot of the lines between them will be coincident at a point

, and subject to the constraint I italicized above.

Please submit solutions to topological[dot]musings[At]gmail[dot]com by Friday, July 17, 11:59 pm (UTC); do not submit solutions in Comments. Everyone with a correct solution will be inducted into our Hall of Fame! We look forward to your response.

The solutions are in! This problem of the week was interesting for me: the usual pattern has been that I pose problems that I’ve solved myself at some point in the past, giving me a kind of “inside edge” on understanding the solutions that come in. But, as I said in POW-12, the difference this time is that the solution I knew of came from someone else (Arin Chaudhuri). What was interesting for me — and given the circumstances, it was probably inevitable — is that some of the solutions we received forced me to make contact with some mathematics I’d only heard about but never studied. Let me get to that in a minute.

Another interesting thing for me — speaking now with my category theorist’s hat on — is how utterly simple and conceptual Arin’s proof was! I was pleased to see that regular problem solver Philipp Lampe also spotted it. Wait for it…

Solution I by Philipp Lampe, University of Bonn: The answer is 8.

Among the eight neighbors of an arbitrary vertex, all colors of an admissible coloring must occur. Thus, 8 is an upper bound for the maximum number of colors one can use. We have to show that there is an admissible coloring with eight colors.

The vertices of the 8-dimensional cube may be represented by vectors with

in

, in other words as vectors in

, the 8-dimensional vector space over the field

with two elements (i.e., the integers modulo 2). Two such vectors

are neighbors iff their coordinate vectors differ in exactly one place, in other words if

considered modulo 2, where

is one of the eight standard basis elements (with

-th coordinate 1, and the other coordinates 0).

Now let our “colors” be the 8 elements of

, the 3-dimensional vector space over

. Let the vertex “coloring”

be the unique -linear map such that

; that is, define the color of a vertex/vector by

Now, if is any vector, the colors of its neighbors are

. The colors of these neighbors are all distinct since the

are distinct. Hence all 8 colors are represented among the colors of the neighbors of any given vertex

, QED.

What I love about this solution it is how natural it is. I’ll say a little more about this in remarks below.

But I also learned a thing or two by studying the next solution. It relies on the theory of Hamming codes, with which I was not conversant. Up to some small edits, here is exactly what Sune Jakobsen submitted:

Solution II by Sune Jakobsen (first-year student), University of Copenhagen: Since each vertex only has 8 neighbors, the answer cannot be greater that 8.

Now we construct such a coloring with 8 colors. An 8-dimensional cube can be represented by the graph with vertices in , and with an edge between them iff the Hamming distance between them is 1. We color a vertex with color 8 if the seven first bits in the vertex is a “correct” Hamming message (cf. Hamming code (7,4)), and color it in color

if the first seven bits give a correct Hamming message upon changing bit

. This is a well-defined coloring, since each element in

is either a correct Hamming message, or is Hamming distance 1 away to exactly one correct Hamming message.

It remains to show that no vertex is neighbor to two vertices of the same color. The Hamming distance between these two vertices is 2, thus the Hamming distance between the first 7 bits of two neighbors must be 1 or 2. If two neighbors had the same color , then by definition one would get two correct Hamming messages by changing bit

in both of them, and the Hamming distance between these messages would be the same as before — either 1 or 2. But the distance between any two correct Hamming messages is at least 3. Contradiction.

Remarks:

1. Let me give a little background to Sune’s solution. Mathematically, the Hamming code called “(7, 4)” is the image of injective linear map

given by the matrix

1101

1011

1000

0111

0100

0010

0001

The Hamming code is what is known as an “error-correcting code”. Imagine that you want to send a 4-bit message (each bit being a 0 or 1) down a transmission line, but that due to noise in the line, there is the possibility of a bit being flipped and the wrong message being received. What can you do to help ensure that the intended message is received?

The answer is to add some “parity check bits” to the message. In the code (7, 4), one adds in three parity checks, so that the transmitted message is 7 bits long. What one does is apply the matrix to the 4-vector, to get a 7-vector (remember we’re working modulo 2), and this 7-vector is sent down the line. Assuming only a moderate amount of noise in the transmission line, perhaps the 7-bit message will remain intact or perhaps a single bit will be flipped, more rarely two bits will be flipped (and we’ll assume the event that more than two are flipped has negligible probability). Now, the Hamming encoding

is rigged up so that if the 7-bit vector is received as sent, then the parity checks will report 0 errors. If the parity checks report an error, they report precisely where the error occurred if the received vector was off by one bit. (If two flips occurred, then the receiver can still detect from the parity checks that an error occurred. The parity checks can never detect how many bits were flipped, but if the receiver assumes correctly that just one bit got flipped, he can restore the intended message with accuracy. If three or more got flipped, then the receiver got the wrong message, but he would never know it if the parity checks reported back 0 errors.)

How does this work? By having the receiver apply a parity-check matrix to the received message, given by the

matrix

1010101

0110011

0001111

In the first place, , and so if the message received belongs to the image of

(is a “correct Hamming message” in the terminology of Solution II), which will indeed be the case if there were no errors in the transmission, then

applied to the message will produce the zero vector. In the case where one bit got flipped in the transmission,

applied to the received vector will return a nonzero 3-vector, but the beauty of the Hamming code is that the 3-vector will spell out in binary the bit the error occurred (for example, if the output vector is

, then error occurred in the bit with binary number 011, that is bit 3). In that case, the receiver flips that bit to restore the original message. In these two cases, the original 4 bits are then read off as the 3rd, 5th, 6th, and 7th coordinates of the (possibly corrected) 7-vector.

By the way, Sune reminded us that Hamming codes also came up in a post by Greg Muller over at The Everything Seminar, who used the existence of a Hamming code in every dimension to solve general hat-guessing puzzles.

2. Within minutes of posting the original problem, we received a message from David Eppstein, who reported that the solution of 8 colors is essentially contained in a paper he coauthored with T. Givaris (page 4); his solution is close in spirit to Sune’s.

Arin Chaudhuri also surmised the connection to Hamming codes, and mentions that the problem (in a slightly different formulation) originally came from a friend of a friend, who blogs about a number of related problems on this page. Presumably the author had things like error-correcting codes in mind.

3. Sune and David noted that their solutions generalize to show that on the -dimensional cube (for any

), it is possible to color the vertices with

colors (the maximum number possible) so that all of the colors show up as colors of the neighbors of any given vertex.

This is very easy to see by adapting Philipp’s method. Indeed, for each just take the color set to be the

-vector space

. The set of vertices of the

-dimensional cube may identified with the

-vector space

generated by the set

(as basis), and the desired coloring is then just the

-linear map

that extends the identity function on

. As mathematical constructions go, you can’t get much more canonical than that! No fuss, no muss — it’s a purely categorical construction (categorists call it a counit of an adjunction). So then why didn’t I see that myself? 🙂

4. We’re still not sure what the story is in other dimensions. If is the maximum number of admissible colors in dimension

, then about all any one of us knows right now is that

for

,

is weakly monotone increasing, and that

if

is not a power of 2. There’s a conjecture afloat that

where

is the floor function, but for now that should be treated as a wild guess. If anyone has further information, please let us know!

Solved by Arin Chaudhuri, David Eppstein, Sune Jakobsen, and Philipp Lampe. Thanks to all those who wrote in!

Happy holidays, folks! And happy birthday to Topological Musings, which Vishal started just a little over a year ago — we’ve both had a really good time with it.

And in particular with the Problem of the Week series. Which, as we all know, doesn’t come out every week — but then again, to pinch a line from John Baez’s This Week’s Finds, we never said it would: only that each time a new problem comes out, it’s always the problem for that week! Anyway, we’ve been very gratified by the response and by the ingenious solutions we routinely receive — please keep ’em coming.

This problem comes courtesy of regular problem-solver Arin Chaudhuri and — I’ll freely admit — this one defeated me. I didn’t feel a bit bad though when Arin revealed his very elegant solution, and I’d like to see what our readers come up with. Here it is:

What is the maximum number of colors you could use to color the vertices of an 8-dimensional cube, so that starting from any vertex, each color occurs as the color of some neighbor of that vertex? (Call two vertices neighbors if they are the two endpoints of an edge of the cube.)

Arin, Vishal, and I would be interested and pleased if someone solved this problem for all dimensions .

Please submit solutions to topological[dot]musings[At]gmail[dot]com by Friday, January 2, 2009, 11:59 pm (UTC); do not submit solutions in Comments. Everyone with a correct solution will be inducted into our Hall of Fame! We look forward to your response.

The solutions to POW-11 are in! Or make that solution, unless you also count your hosts’: only one reader responded. Perhaps everyone has been distracted by the upcoming US presidential election?!

Composite solution by Philipp Lampe, University of Bonn, and Todd and Vishal: We claim there are exactly two functions which satisfy the identity

: the identity function and the function which is identically zero.

Clearly forces

, whence

. Therefore

or

, and the claim above follows if we show that

for all

. The first few cases are built up as follows:

where in a few places we have invoked the simple lemma that [since

]. The remainder of the argument proceeds by strong induction. For odd

, we have

Since preserves sums of two squares, we derive

Therefore, using the inductive hypothesis that for

, we have

From equation (1) we also have

Comparing equations (2) and (3), we infer . In particular,

for

, and we have the case

from before. This will be used to start the induction for even

, starting from

.

For even , where

, we have

and by arguing as we did above, we derive the two equations

where the second follows from the first by inductive hypothesis. Multiplying (4) through by and comparing with the last equation, we conclude

, and this completes the proof.

Remarks:

The underlying idea is to exploit the fact that many numbers can be expressed as a sum of two squares in more than one way, so that one can generate enough relations of the form to nail down all the

[in terms of

]. A powerful way of generating equations of the form

is to consider prime factorizations in the ring of Gaussian integers

. For example, in the Gaussian integers we have the prime factorizations

so that there are two ways of writing their product 65 in the form :

Here we have, respectively, ,

, whence

. Extrapolating from this, if we set

then we have , whence we derive

This gives a large supply of available relations to work with, and from there it is not too bad to get an inductive argument up and flying, once some base cases have been cleared out of the way with some numerical experimentation.

[Although it’s a little unusual to need such an outlay of base cases (up to at least ) before one can start the induction; it creates a psychological hurdle, I feel. It reminds me of POW-6, where no complete solutions were received: one has to fiddle with a number of base cases before the general argument can be pushed through.]

Incidentally, while researching this problem, I found the wonderful website MathPages, created by an all but anonymous author named Kevin Brown. I really recommend checking out this website, and I hope that it is somehow kept alive: it would be a shame for such a treasure trove to be buried and forgotten in some deep hollow of cyberspace.

Time for another problem of the week! This one doesn’t necessarily require any number-theoretic knowledge, although a little bit might help point you in the right direction. As usual, let be the set of natural numbers, i.e., the set of nonnegative integers.

Describe all functions

such that

for all

.

Please submit solutions to topological[dot]musings[At]gmail[dot]com by Sunday, November 2, 11:59 pm (UTC); do not submit solutions in Comments. Everyone with a correct solution will be inducted into our Hall of Fame! We look forward to your response.

The solutions are in! As is so often the case, solvers came up with a number of different approaches; the one which follows broadly represents the type of solution that came up most often. I’ll mention some others in the remarks below, some of it connected with the lore of Richard Feynman.

Solution by Nilay Vaish: The answer to POW-10 is . Put

and integrate by parts: we have

The first term vanishes by a simple application of L’hôpital’s rule. We now have

where the second equation follows from , and the general elementary fact that

Adding the last two displayed equations, we obtain

This gives

after a simple substitution. The last integral splits up as two integrals:

but these two integrals are equal, using the identity together with the general integration fact cited above. Hence the two sides of (3) equal

recalling equation (1) above. Substituting this for the last integral in equation (2), we arrive at

whence we derive the value of the desired integral .

Remarks:

1. A number of solvers exploited variations on the theme of the solution above, which could be summarized as involving symmetry considerations together with a double angle formula. For example, Philipp Lampe and Paul Shearer in their solutions made essential use of the identity

in conjunction with the complementarity , and the general integration fact cited above.

2. Arin Chaudhuri (and Vishal in private email) pointed out to me that the evaluation of the integral

is actually fairly well-known: it appears for example in Complex Analysis by Ahlfors (3rd edition, p. 160) to illustrate contour integration of a complex analytic function via the calculus of residues, and no doubt occurs in any number of other places.

3. Indeed, Simon Tyler in his solution referred to this as an integral of Clausen type, and gave a clever method for evaluating it: we have

which works out to

The last integral can be expanded as a series

where the summands for odd vanish; the other terms can be collected and then resummed to yield

and by substituting this for the integral in (*), the original integral is easily evaluated.

4. Arin C. later described still another method which he says he got from an exercise in a book by Apostol — it’s close in spirit to the one I myself had in mind, called “differentiation under the integral sign”, famously referred to in Surely You’re Joking, Mr. Feynman!. As Feynman recounts, he never really learned fancy methods like complex contour integration, but he did pick up this method of differentiating under the integral sign from an old calculus book. In typical Feynman style, he writes,

“It turns out that’s not taught very much in the universities; they don’t emphasize it. But I caught on how to use that method, and I used that one damn tool again and again. So because I was self-taught using that book [Wilson’s Advanced Calculus], I had peculiar methods of doing integrals. The result was, when guys at MIT or Princeton had trouble doing a certain integral, it was because they couldn’t do it with the standard methods they had learned in school. If it was contour integration, they would have found it; if it was a simple series expansion, they would have found it. Then I come along and try differentiating under the integral sign, and often it worked. So I got a great reputation for doing integrals, only because my box of tools was different from everybody else’s, and they had tried all their tools on it before giving the problem to me.”

So, what is this method? Wikipedia has a pretty good article on it; the idea is to view a given definite integral as an instance of a smoothly parametrized family

of integrals, where the extra parameter

is inserted at some judicious spot, in such a way that

has an easy-to-integrate derivative. Then one figures out

, and evaluates it at the

which yields the original definite integral.

The best way to understand it is through an example. Pretend you’re Feynman, and someone hands you

[I suppose there may be a contour integration method to handle that one, but never mind — you’re Feynman now, and not supposed to know how to do that!] After fiddling around a little, you find that by inserting a parameter in the exponent,

this has a very simple derivative:

i.e., by differentiating under the integral sign, you manage to kill off that annoying factor in the denominator:

We therefore have

and notice that from the original definition of , we have

. Thus

, and the problem integral evaluates to

. Bam!

It takes a little experience to judge where or how to stick in the extra parameter to make this method work, but the Wikipedia article has some good practice problems, including the integral of POW-10. For this problem they recommend considering

and I’ll let you the reader take it from there.

The point is not that this method is much more elegant than others, but that a little practice with it should be enough to convince one that it’s incredibly powerful, and often succeeds where other methods fail. (Not always, of course, and Feynman had to concede defeat when in response to a challenge, his pal Paul Olum concocted some hellacious integral which he said could only be done via contour integration.)

Doron Zeilberger is also fond of this method (as witnessed by this paper). Something about it makes me wonder whether it’s secretly connected with the idea of “creative telescoping” and the powerful methods (e.g., see here) developed by Gosper, Wilf, Zeilberger, and others to establish identities of hypergeometric type. But I haven’t had time to consider this carefully.

Also solved by Arin Chaudhuri, Philipp Lampe (University of Bonn), Paul Shearer (University of Michigan), and Simon Tyler. Thanks to all who wrote in!

Another problem of the week has been long overdue. Here’s one that may appeal to those who liked POW-5 (maybe we should have an integration problem every fifth time?):

Evaluate

Please submit solutions to topological[dot]musings[At]gmail[dot]com by Friday, October 10, 11:59 pm (UTC); do not submit solutions in Comments. Everyone with a correct solution will be inducted into our Hall of Fame! We look forward to your response.

The solutions are in! Last week’s POW-9 is a problem for which you need a bit of insight to solve at all (even with calculator or computer), and then a bit more to solve elegantly without machine aid.

Solution by Philipp Lampe, University of Bonn: The answer is . The decimal expansion of 1/65537 is eventually periodic with (minimum) period length

. Suppose it takes the form

for integers and

. Then

, and therefore

; equivalently

. The minimum

such that

(i.e., the order of 10 modulo 65537) must then divide

. On the other hand, from

, we derive

Thus by minimality of , we conclude

, and that the decimal expansion is periodic (not merely eventually periodic).

It is well known that is prime (it is the largest known Fermat prime), so by Fermat’s little theorem, the order of 10 modulo

divides

(where

is the Euler phi-function), and so it must be a power of 2, at most

. That the order is in fact

can be checked either by brute force (by repeated squaring and reduction modulo 65537, best carried out with calculator or computer), or by the following number-theoretic argument.

Since is prime, the multiplicative group of nonzero elements of the field

is cyclic (is generated by a single element, say

). Hence

where

, and

itself is a generator (has order 65536) iff

is prime to 65536, i.e., iff

is odd. This occurs iff 10 is a non-square modulo

. Defining the Jacobi symbol by the usual formula

(so that iff

is a square modulo

), we have

The second factor is, by the law of quadratic reciprocity (and the fact ),

Also, by the “second supplement“, we compute . Hence

: 10 is a non-square as desired. This completes the proof.

Remarks:The argument in the first paragraph of Philipp’s solution proves for 1/65537 a fact which holds generally for any reduced fraction where

is prime to 10: that the decimal expansion is actually periodic, not just eventually periodic. Henry Wilton also took the time and trouble to argue this point. I’m not sure how widely known that is, so I was glad the point was made; of course the main thing we need from that paragraph is the general fact that the period length of

equals the order of 10 modulo

.

A number of solvers computed the order of 10 by brute force, which I accepted. The use of quadratic reciprocity is slick but gets into rather deeper waters. As the Wikipedia article explains, this law was known to (or at least conjectured by) Euler and Legendre, but resisted a complete proof until Gauss found one after an intensive effort; it was one of his proudest achievements, and a theorem he returned to over the decades, giving over half a dozen more proofs in his life. Now more than 200 proofs are known. One proof that I like was given by Zolotarev, which is given a nice account in an appendix to John Conway’s very entertaining book, The (Sensual) Quadratic Form. Arin Chaudhuri pointed out to me that it’s also given as an exercise in Knuth’s The Art of Computer Programming (Vol. I, p. 45, exercise 47).

The “second supplement” was, according to the Wikipedia article, first proved by Euler. Its use here can be circumvented by observing that 2 cannot have order since already

, so that by Lampe’s argument, 2 is a square mod

, i.e.

.

Quadratic reciprocity is no isolated curiosity: most of the deeper, more conceptual treatments involve some nontrivial algebraic number theory, and the efforts to formulate reciprocity laws for higher powers had a lot to do with the creation of class field theory, culminating in Artin reciprocity, one of the crowning achievements of early 20th century mathematics.

Also solved by Arin Chaudhuri, Ashwin Kumar, Vladimir Nesov, Américo Tavares, Nathan Williams, and Henry Wilton. Thanks to all who wrote in!

Recent Comments